This is the first in a series of posts in which I will build an example of a comprehensive modular Amazon Connect solution. As usual, all code is available on GitHub under an MIT license, and contributions are welcome.

Demo video

Click here for a demo of this modular solution on my channel on YouTube

Introduction

Contact centers serve as vital hubs for customer interactions, making it essential for organizations to streamline their operations, maximize efficiency, and deliver exceptional customer experiences. Amazon Connect, a cloud-based contact center service, has revolutionized the industry with its scalability and flexibility. Traditionally, contact center flows were hard-coded, limiting customization and hindering development speed. However, the emergence of modular flows has transformed the landscape, enabling contact centers to leverage modules as reusable building blocks. In this extensive series of blog posts, we will explore the myriad benefits of adopting a modular Amazon Connect solution, emphasizing their superiority for large contact centers with multiple business units and/or consultancies catering to diverse clients. We will delve into the advantages of faster development, composability, API development for modules, module sharing, and improved Continuous Integration and Continuous Deployment (CICD) practices.

Modular Flows for Consultancies and Large Contact Centers

Consultancies specializing in contact center solutions often cater to diverse clients with unique requirements. Similarly, large contact centers operating across multiple business units face the same challenges in managing customer interactions effectively. The adoption of modular flows within Amazon Connect provides significant advantages for both kinds of organization.

- Reusability and Consistency: Modular flows promote the reuse of common components across different clients. By developing reusable modules for standard call handling processes, contact centers can ensure consistency in customer interactions. This reduces maintenance efforts, guarantees a unified customer experience, and streamlines updates across the organization.

- Customizability: Modules allow the experience to be customized within defined limits. Each module can be configured to meet specific client needs, resulting in contact center solutions that align with individual business processes and industry requirements. By leveraging modular flows, consultancies or large contact centers can deliver bespoke solutions without reinventing the wheel each time.

- Time and Cost Savings: Building contact center solutions from scratch for each client is time-consuming and resource-intensive. Modular flows expedite development cycles by allowing consultancies to reuse existing modules and customize them as needed. This approach significantly reduces development effort, lowers costs, and enables consultancies to deliver solutions more efficiently.

- Scalable and Future-Proof Solutions: Consultancies need to provide solutions that can scale with their clients’ evolving needs. Modular flows offer the flexibility to add or modify modules independently, ensuring solutions remain scalable and adaptable. By developing modular solutions, consultancies future-proof their offerings, enabling clients to stay ahead in a dynamic business landscape.

Faster Development with Modular Flows

In today’s competitive environment, speed-to-market is critical for software development projects. Modular flows offer a range of benefits that accelerate development cycles:

- Loose Coupling: Modular flows are designed to be loosely coupled, enabling independent development of individual modules. This allows multiple teams to work simultaneously on different modules, reducing development time and enabling parallel progress. Loosely coupled modules also facilitate easier debugging and maintenance, as changes made to one module have minimal impact on others.

- Code Reusability: Instead of rebuilding common functionality that is already covered by a standard suite of modules, developers can focus on the unique and specialized modules that individual clients require. This not only speeds up project delivery but also ensures consistency, reduces errors, and enhances the overall quality of the solution.

- Simplified Testing and Maintenance: Modular flows facilitate granular testing, as each module can be tested independently. This approach enables focused quality assurance efforts, reducing overall testing time and allowing for faster identification and resolution of issues. Furthermore, maintenance becomes more straightforward as individual modules can be modified without disrupting the entire system.

Composability and API for Modular Flows

Modular flows introduce the concept of composability, allowing modules to be combined and reused as building blocks to create different contact center solutions. This brings several advantages:

- Flexible Solution Design: With modular flows, organizations can compose unique contact center solutions by combining different modules to meet specific requirements. By treating modules as interchangeable components, businesses can tailor their solutions to address diverse customer needs and adapt to evolving market trends.

- Defining Inputs and Outputs: To ensure interoperability between modules, an API can be developed. Each module follows a defined naming convention for its inputs and outputs, facilitating seamless integration with other modules. This standardized approach enables smooth communication and data exchange between modules, enabling the creation of complex contact center solutions.

- Community Collaboration: Modular flows enable collaboration within a community of developers, allowing for the sharing and contribution of modules. Third parties can develop modules that address specific functionalities, industry verticals, or unique use cases. This collaborative ecosystem fosters innovation, expands the range of available modules, and enriches the overall Amazon Connect ecosystem.

No Lock-In and Independence

Modular flows built within Amazon Connect leverage standard Amazon Connect flows, eliminating any external dependencies. This ensures that organizations are not locked into bespoke custom solutions and can leverage the full capabilities of Amazon Connect. The absence of lock-in offers businesses the freedom to explore alternative solutions without sacrificing their investments or being restricted to a specific management solution.

CICD Implementation with Modular Flows

Continuous Integration and Continuous Deployment (CICD) practices are essential for ensuring the efficient delivery of software solutions. Modular flows align perfectly with CICD principles and enable organizations to streamline their deployment processes:

- Independent Module Testing: Modular flows facilitate individual module testing as each module can be developed and tested independently. This granularity allows for more focused and efficient testing efforts, reducing the overall testing time and enabling quicker feedback loops.

- Separate Deployment of Modules: Modular flows support the deployment of individual modules as part of the CICD process. Each module can be deployed separately, minimizing disruption to the entire contact center system. This modular deployment approach ensures seamless integration of new features, bug fixes, and improvements while maintaining the stability of the overall solution.

Solution Design

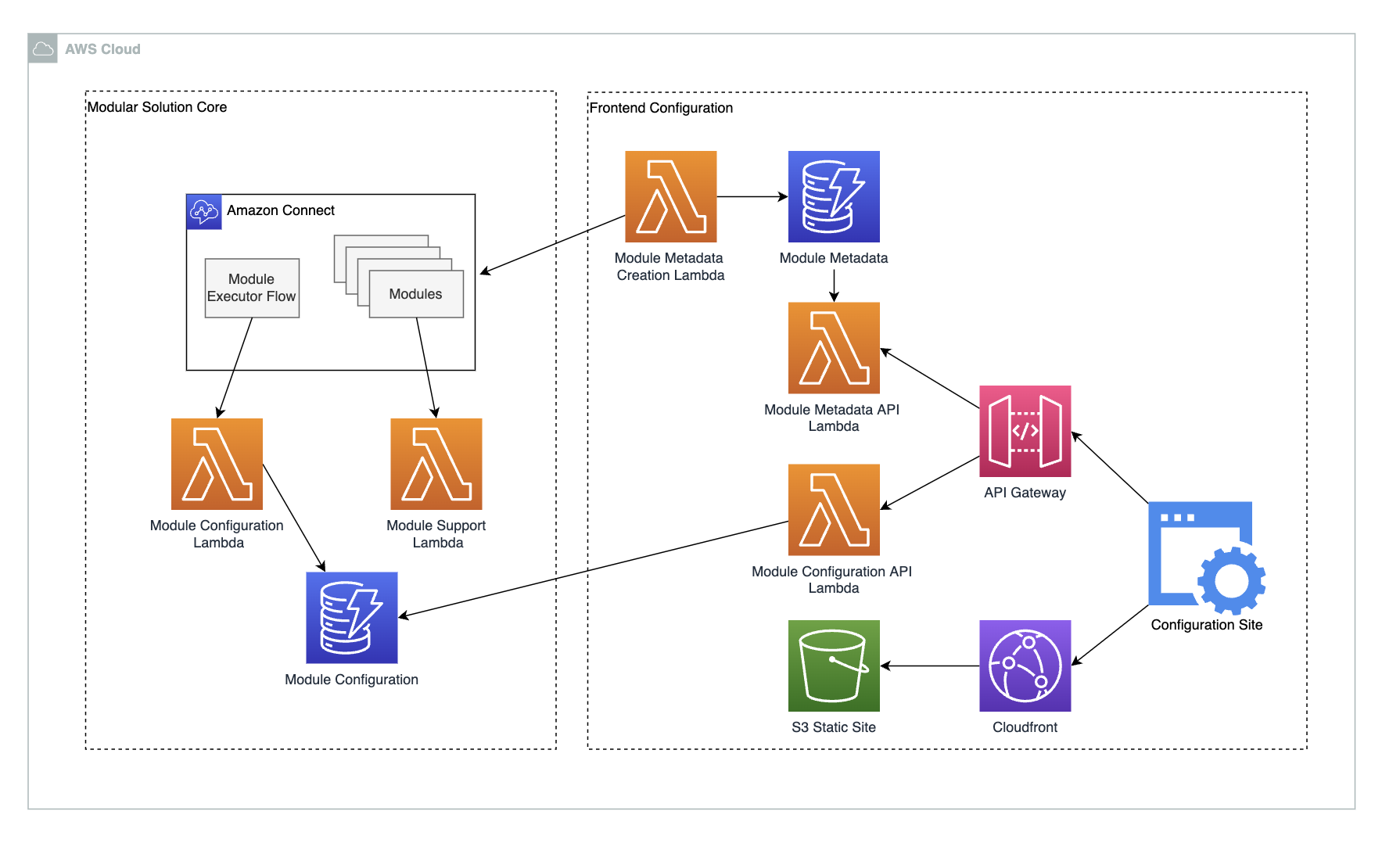

High level architecture

Now that we have an overview of the benefits of a more modular Amazon Connect design, we can start working through the core of a modular design. We will look first at the foundational functional side of the solution; later blog posts will cover management and CICD. This is the left-hand side of the architecture diagram above. Much of the initial work is in developing the flows and modules within Connect, whereas later we will look at more of the supporting services in AWS to round out the solution.

When designing the core of this solution we need to look at several areas: building the modules, storing and retrieving the configuration for modules, and executing the modules within Connect.

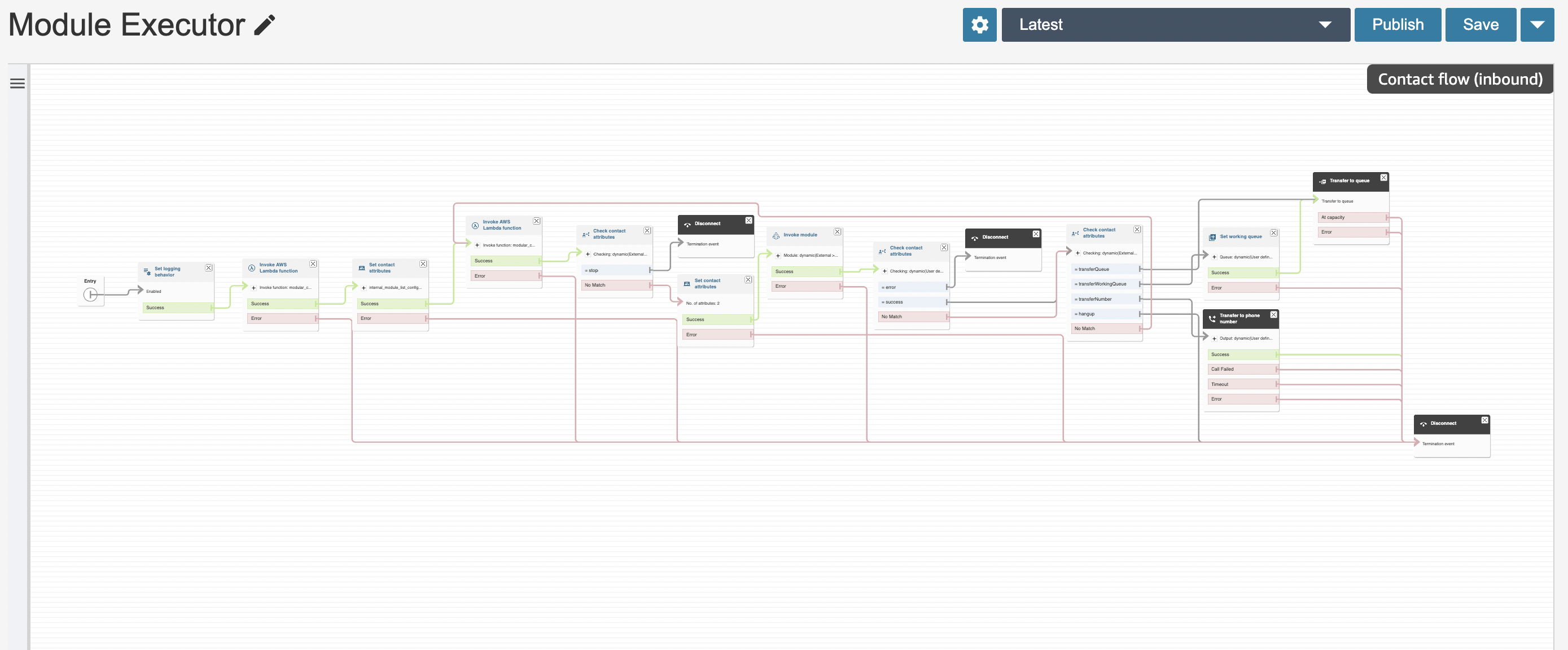

Executor Flow

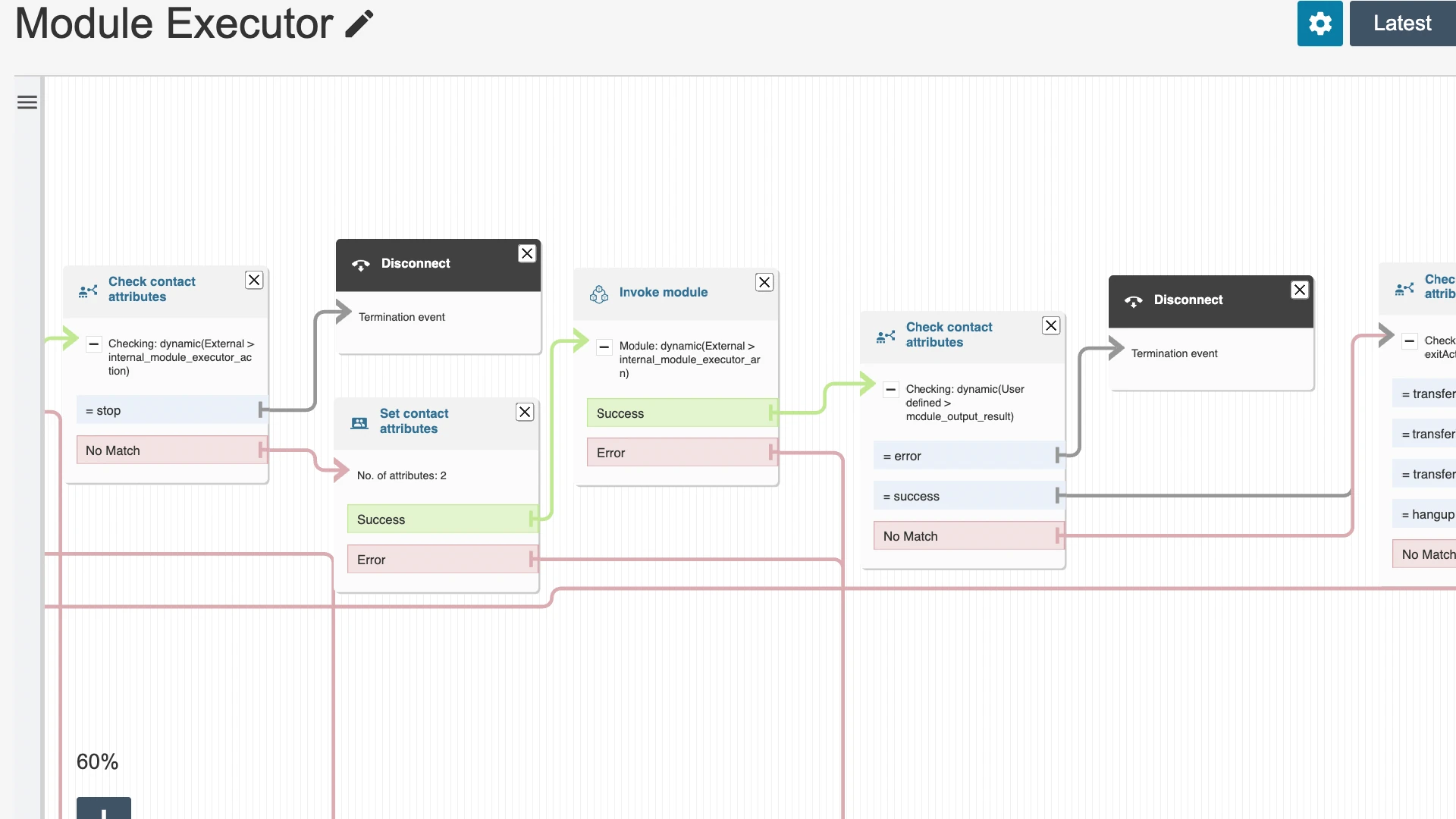

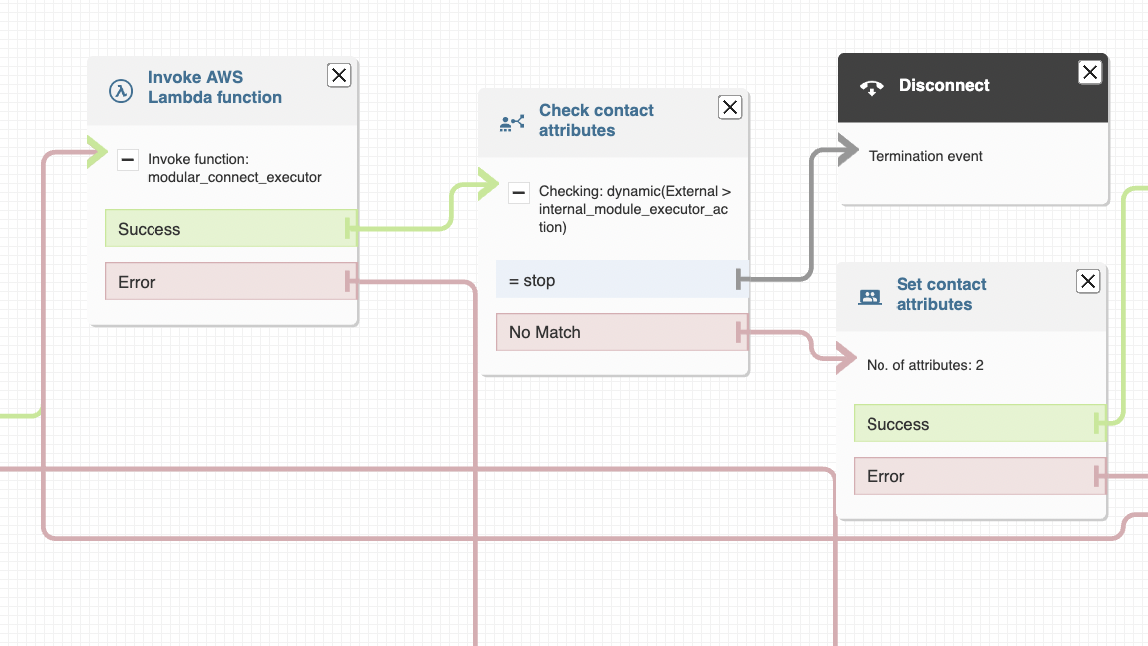

Module executor flow

Let's start with the executor flow. The main executor flow essentially takes a list of modules and calls them in sequence. This is the main flow within the Connect solution. There may be other supporting flows for queues, whispers, and so on, but all of the actual business logic and functionality is moved into the reusable modules. Looking in more detail at each part of this flow:

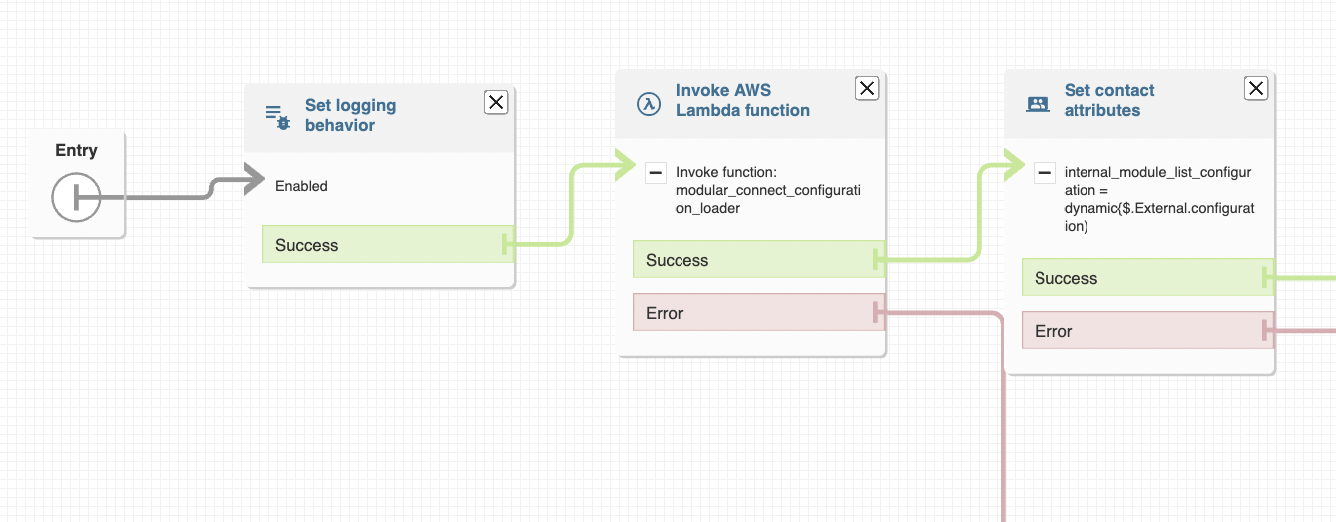

Module executor flow detail

Initially in this flow we need to retrieve the configuration from the database. This configuration describes all of the modules that will be executed and the settings for each of the modules. For this blog example we will key this information on the dialled number of the contact center.

To retrieve the data we use a simple Lambda function such as the one below. This will retrieve the configuration from DynamoDB and just store the blob of JSON as a string. Why are we storing it as a JSON string? Since we will be passing this configuration to other Lambda functions and not actually using it in flow blocks within Amazon Connect, we do not need to parse it within Connect. Amazon Connect recently introduced JSON support for Lambda functions, allowing a native JSON object to be returned from a Lambda call rather than, as below, a string. However, this JSON support is not yet fully featured enough to allow us to set attributes with parts of the JSON response, and so in our case we cannot yet use this functionality.

modular_connect_configuration_loader

```javascript
import { DynamoDBClient, ScanCommand } from '@aws-sdk/client-dynamodb';
import { unmarshall } from '@aws-sdk/util-dynamodb';

// Created once per execution environment so warm invocations reuse the client.
const client = new DynamoDBClient();

export const handler = async (event, context) => {
  // The dialled number is the key used to look up the configuration.
  const phoneNumber = event.Details.ContactData.SystemEndpoint.Address;

  // A Scan reads the table and then applies the filter, which is fine for a
  // small example table like this one.
  const params = {
    TableName: 'modular_connect_configurations',
    FilterExpression: 'phoneNumber = :phoneNumber',
    ExpressionAttributeValues: {
      ':phoneNumber': { S: phoneNumber }
    }
  };

  try {
    const command = new ScanCommand(params);
    const response = await client.send(command);

    if (response.Items && response.Items.length > 0) {
      // Convert the DynamoDB item to plain JSON and hand it back as a string.
      return {
        "configuration": JSON.stringify(unmarshall(response.Items[0]))
      };
    } else {
      console.log('Item not found.');
      return null;
    }
  } catch (error) {
    console.error('Error retrieving item:', error);
    throw error;
  }
};
```
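The lookup key and request can also be explored locally without calling AWS. The sketch below is only an illustration under stated assumptions: it pulls the dialled number out of a trimmed-down Connect event and builds the input for a DynamoDB Query instead of a Scan. The index name `phoneNumber-index` and the helper names are hypothetical, since the table definition is not shown in this post, and a Query like this would only work if such an index existed.

```javascript
const CONFIG_TABLE = 'modular_connect_configurations';
// Assumption: a global secondary index keyed on phoneNumber (hypothetical name).
const PHONE_NUMBER_INDEX = 'phoneNumber-index';

// Pull the dialled number out of the event Amazon Connect passes to Lambda.
// Returns null when the event does not carry a system endpoint address.
function getDialledNumber(event) {
  return event?.Details?.ContactData?.SystemEndpoint?.Address ?? null;
}

// Build the input object for a QueryCommand on the low-level client, so
// values are written in attribute-value form, e.g. { S: '...' }.
function buildConfigurationQuery(phoneNumber) {
  return {
    TableName: CONFIG_TABLE,
    IndexName: PHONE_NUMBER_INDEX,
    KeyConditionExpression: 'phoneNumber = :phoneNumber',
    ExpressionAttributeValues: { ':phoneNumber': { S: phoneNumber } },
    Limit: 1,
  };
}

// Trimmed-down example event, for local experimentation only.
const sampleEvent = {
  Details: {
    ContactData: {
      SystemEndpoint: { Address: '+6465909655', Type: 'TELEPHONE_NUMBER' },
    },
    Parameters: {},
  },
};

const queryInput = buildConfigurationQuery(getDialledNumber(sampleEvent));
console.log(JSON.stringify(queryInput, null, 2));
```

If the index exists, the resulting object could be passed to `new QueryCommand(queryInput)` from `@aws-sdk/client-dynamodb`. A Query reads only the matching items, whereas a Scan reads the table before filtering, which matters once the table holds many configurations.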

The response of this Lambda function is an attribute called configuration, holding the configuration that was looked up by the dialled number. Its contents would look like the example below:

```json
{
  "id": "3311f720-7a45-4170-8db6-af0812504760",
  "name": "Example Call Center",
  "phoneNumber": "+6465909655",
  "modules": [
    {
      "id": "7ae76d80-9274-4129-8f00-c1640b805bc3",
      "flowid": "0a0e2425-8138-40e7-860f-2df1a90b977c",
      "description": "Sets the voice from the list available in this module",
      "name": "SetVoice",
      "settings": {
        "Voice": "Aria"
      }
    },
    {
      "id": "a41f977c-d2b7-4444-8248-4ce8b981e9e4",
      "flowid": "e8e2efa6-dc0f-4a5c-8ad2-8f37b687d7b1",
      "description": "Plays the welcome prompt",
      "name": "PlayPrompt",
      "settings": {
        "PromptType": "text",
        "PromptValue": "Welcome to Example Limited"
      }
    },
    {
      "id": "528307de-a801-4b5a-9394-572cf3076f52",
      "flowid": "73106255-4883-4f57-b02c-42d9f73a2458",
      "description": "A DTMF menu to select either a password reset or technical support",
      "name": "DTMFMenu",
      "settings": {
        "1Action": "playMessageHangup",
        "1Value": "To reset your password please visit www.example.com/passwordreset. Thank you, good bye.",
        "2Action": "setWorkingQueue",
        "2Value": "technical_support_queue",
        "MenuMessage": "For password resets please press 1. For other technical support press 2."
      }
    },
    {
      "id": "1c504dba-15bf-48b3-aef4-310711f5e4ef",
      "flowid": "47a5346e-e1a4-4f83-9d10-ba86c88ebccc",
      "description": "Plays the estimated wait time",
      "exitAction": "transferWorkingQueue",
      "name": "EstimatedWaitTime",
      "settings": {}
    }
  ]
}
```

Here we have all of the modules that will be executed as part of this configuration. We will discuss the individual module configuration later in this post. For now, though, we can see fairly clearly what this configuration does: it sets a voice, plays a welcome prompt, offers a simple DTMF menu, and then plays the estimated wait time before transferring to a queue. The thing to note even at this point is that we are only configuring settings for these modules; the modules themselves can have any amount of standardized behavior. For example, the DTMF module configuration above does not need to specify behavior for invalid entries, timeouts, and so on. All of that is handled by the module in a consistent way.
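Because everything downstream depends on this configuration having the expected shape, it can be worth checking it before it is stored. The following is a minimal sketch, not part of the original solution: `validateConfiguration` is a hypothetical helper, and the rules it enforces (an `id`, a `name`, and a `settings` object per module, with unique ids) are assumptions drawn from the sample above.

```javascript
// Returns a list of human-readable problems; an empty list means the
// configuration has the shape assumed in this sketch.
function validateConfiguration(config) {
  if (!config || !Array.isArray(config.modules)) {
    return ['configuration must contain a "modules" array'];
  }
  const problems = [];
  const seenIds = new Set();
  config.modules.forEach((module, position) => {
    const label = `modules[${position}]`;
    if (module === null || typeof module !== 'object') {
      problems.push(`${label} must be an object`);
      return;
    }
    // Ids should be unique because the next module is located by id.
    if (typeof module.id !== 'string' || module.id === '') {
      problems.push(`${label} is missing an "id"`);
    } else if (seenIds.has(module.id)) {
      problems.push(`${label} repeats id ${module.id}`);
    } else {
      seenIds.add(module.id);
    }
    if (typeof module.name !== 'string' || module.name === '') {
      problems.push(`${label} is missing a "name"`);
    }
    const settings = module.settings;
    if (settings === null || typeof settings !== 'object' || Array.isArray(settings)) {
      problems.push(`${label} needs a "settings" object (it may be empty)`);
    }
  });
  return problems;
}

// Example: the second module reuses an id and has no settings object.
const example = {
  modules: [
    { id: 'a', name: 'SetVoice', settings: { Voice: 'Aria' } },
    { id: 'a', name: 'PlayPrompt' },
  ],
};
console.log(validateConfiguration(example));
```

A check like this could run wherever configurations are written, so that the flow itself never has to deal with a malformed module list.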

Module executor flow detail

Now we have retrieved the configuration, we need to start executing the modules that have been defined. To do this we will call our module executor lambda function and pass in the configuration. This is the main lambda that handles all of the logic in determining which module should be executed next. In the example lambda code below it simply iterates through the defined list of modules in our configuration. If there is a module to be executed it sets the details as attributes.

modular_connect_executor

```javascript
export const handler = async (event) => {
  console.log(JSON.stringify(event));

  // The configuration arrives as the JSON string produced by the configuration loader.
  const moduleListConfiguration = JSON.parse(event.Details.Parameters.internal_module_list_configuration);
  const lastModuleId = event.Details.Parameters.internal_module_executor_last_module_id;

  // The next module to execute: the first one on the initial pass, otherwise
  // the module after the last one executed. Null when the list is empty.
  let module = moduleListConfiguration.modules[0] ?? null;
  if (lastModuleId) {
    module = getNextObject(moduleListConfiguration.modules, lastModuleId);
  }

  if (module === null) {
    // Nothing left to run.
    return {
      internal_module_executor_action: "stop"
    };
  }

  return {
    // Reads module.arn; the sample configuration shown earlier names this
    // field "flowid", so use whichever field your stored configuration provides.
    internal_module_executor_arn: module.arn,
    internal_module_executor_settings: JSON.stringify(module.settings),
    internal_module_executor_last_module_id: module.id,
    internal_module_executor_module_exit_action: module.exitAction,
    internal_module_executor_module_exit_action_value: module.exitActionValue,
    internal_module_executor_action: "continue",
  };
};

// Returns the element after the one with the given id, or null if that id
// is the last element or is not present.
function getNextObject(jsonArray, objectId) {
  const index = jsonArray.findIndex(obj => obj.id === objectId);
  if (index !== -1 && index < jsonArray.length - 1) {
    return jsonArray[index + 1];
  }
  return null;
}
```

Module executor flow detail

Next we invoke the module that the executor Lambda has selected. From the point of view of this executor flow we don’t need to know what the module does; we just invoke it and wait until it returns. If there was an unrecoverable error in the module we would disconnect the flow at this point, but the normal case is to continue.

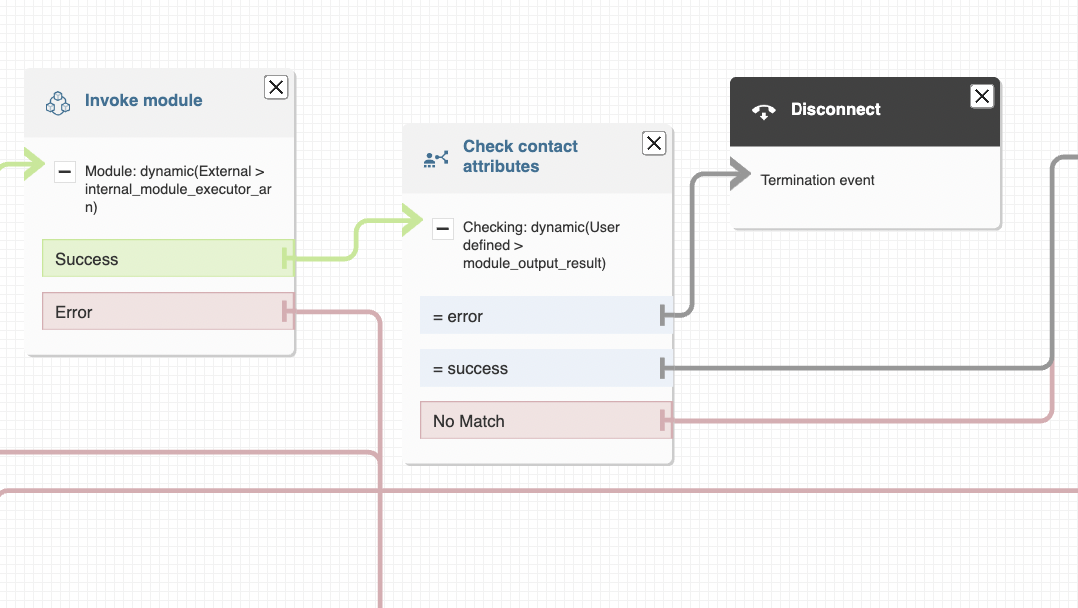

Module executor flow detail

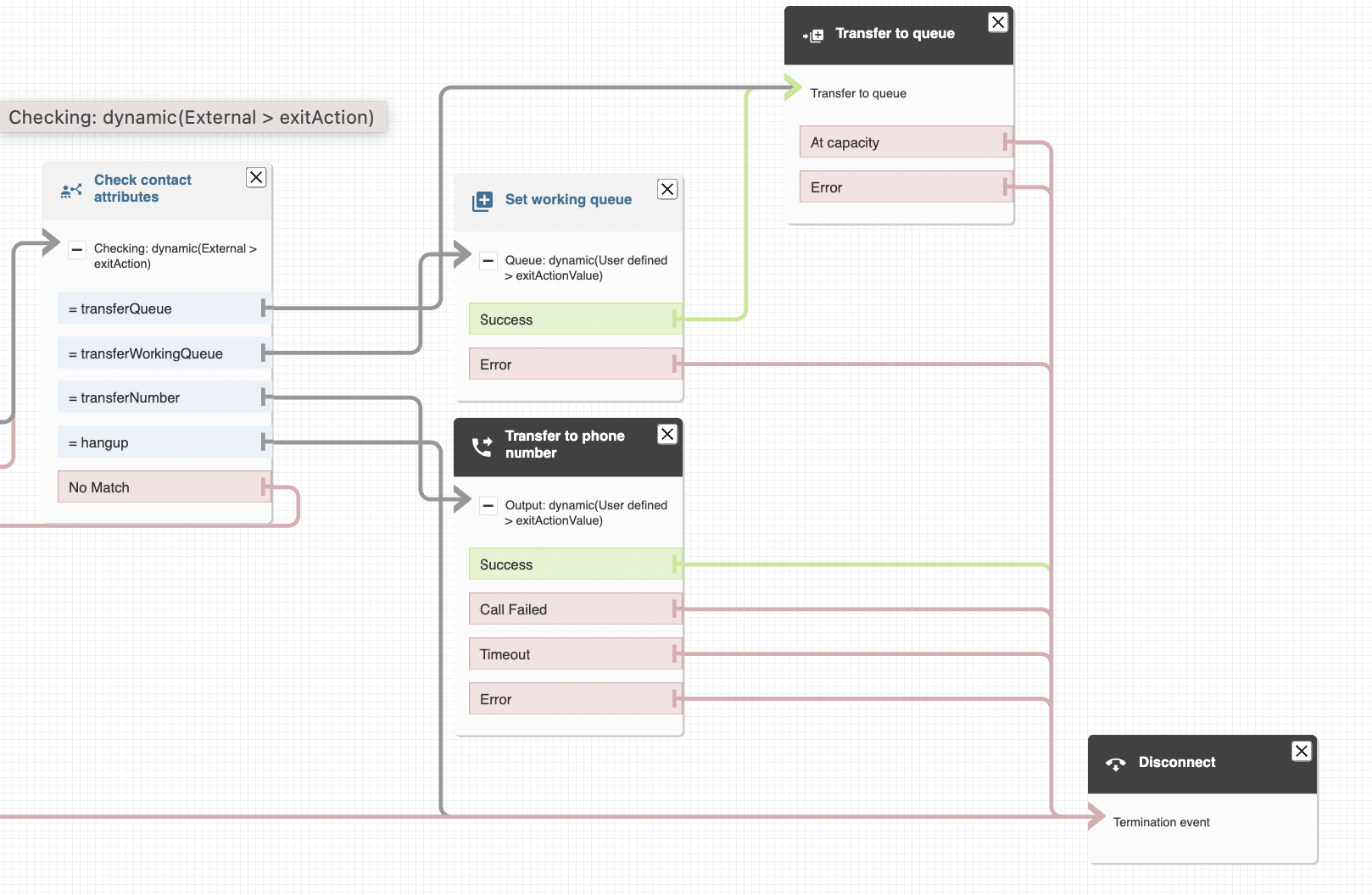

When the module returns we check whether there is any specific exit behavior we should perform. This is where a module can specify that some action should follow its completion. For example, it may say that the call should be terminated, or that the call should go to a queue.

If there is no specific exit condition from the module, we simply loop back to our module executor Lambda to find the next module to execute. This is the “no match” connector in the flow above. This loop is the core of the solution: it keeps executing modules until an exit condition is set.
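The loop can be traced outside Amazon Connect to see the order in which modules run. The sketch below is a simplified simulation, not the flow itself: `simulateExecutorLoop` is a hypothetical helper, the module ids are made up, and it only honours a static `exitAction` from the configuration, whereas a real module may also set exit behavior while it runs.

```javascript
// Same lookup as the executor Lambda: the module after the one that last ran.
function getNextModule(modules, lastModuleId) {
  if (!lastModuleId) return modules[0] ?? null;
  const index = modules.findIndex((m) => m.id === lastModuleId);
  return index !== -1 && index < modules.length - 1 ? modules[index + 1] : null;
}

// Walk the module list the way the executor flow does and record each step.
function simulateExecutorLoop(configuration) {
  const trace = [];
  let lastModuleId = null;
  // Bounded loop: guards against configurations that would never finish,
  // for example two modules sharing the same id.
  for (let step = 0; step <= configuration.modules.length; step++) {
    const module = getNextModule(configuration.modules, lastModuleId);
    if (module === null) {
      trace.push('stop');
      return trace;
    }
    trace.push(`invoke ${module.name}`);
    if (module.exitAction) {
      trace.push(`exit: ${module.exitAction}`);
      return trace;
    }
    lastModuleId = module.id;
  }
  trace.push('aborted: too many iterations');
  return trace;
}

// Shortened version of the sample configuration, with made-up ids.
const configuration = {
  modules: [
    { id: 'm1', name: 'SetVoice', settings: { Voice: 'Aria' } },
    { id: 'm2', name: 'PlayPrompt', settings: { PromptType: 'text' } },
    { id: 'm3', name: 'EstimatedWaitTime', exitAction: 'transferWorkingQueue', settings: {} },
  ],
};

console.log(simulateExecutorLoop(configuration));
```

For this configuration the trace lists the three modules in order and ends with the `transferWorkingQueue` exit; without an exit action on the last module it would end with `stop` instead.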

Module Implementation

Now that we have the main executor flow running, it's time to start looking at the modules and how they will be designed.

One of the most important things to consider when implementing any solution at scale is a consistent approach to the configuration and build of any reusable components. In our case we are building reusable modules in Amazon Connect. Although these are inherently designed by AWS to be reusable, there is no formal pattern for how to build a module and no well-defined best practices for the way in which we will be using them. So the first thing we need to do is design and document a pattern for how we will build modules. This is a module API, if you will.

We want to treat a module as a self contained, isolated, and functional piece of software. To do this we need to have some consistent patterns.

Naming conventions for modules

Naming is important, so let's define some simple patterns:

- Module names (“ModuleName”): Simple, descriptive upper camel case (PascalCase), as this will be displayed to operations teams

- Module Settings (“module_setting_name”): these are the settings for the module. When the module is configured in our database, these are the settings that are passed to the module. Think of a module that plays a prompt, the setting would be the text of that prompt.

- Internal attributes (“internal_attribute_name”): these are attributes that are used by the module/flow to maintain its own state. They are not expected to be used anywhere other than within that module, and can be safely stripped from all contact flow records as they have no relevance to anything except the context of the module while it executes.

- Output attributes (“output_attribute_name”): these are the attributes that the flow may set as an output. These are things that the module may want to share with other flows. Think of a module that calculates the Estimated Wait Time of a queue: this is a useful thing that other modules may want to know, so it is set as an output attribute from the flow.
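These conventions can be checked mechanically. The sketch below is an illustration only, with hypothetical helper names: it assumes the prefixes are exactly `module_setting_`, `internal_`, and `output_` (the last is inferred from the example name above), classifies attribute names by prefix, and strips the internal ones as described for contact records.

```javascript
// Assumed prefixes for each attribute kind in the naming convention.
const PREFIXES = {
  setting: 'module_setting_',
  internal: 'internal_',
  output: 'output_',
};

// Returns 'setting', 'internal', 'output', or 'other' for an attribute name.
function classifyAttribute(name) {
  for (const [kind, prefix] of Object.entries(PREFIXES)) {
    if (name.startsWith(prefix)) return kind;
  }
  return 'other';
}

// Removes attributes that only matter while a module is executing.
function stripInternalAttributes(attributes) {
  return Object.fromEntries(
    Object.entries(attributes).filter(([name]) => classifyAttribute(name) !== 'internal')
  );
}

// Hypothetical attribute set; the names are examples, not taken from a real contact.
const attributes = {
  module_setting_PromptType: 'text',
  internal_module_executor_action: 'continue',
  output_estimated_wait_time: '120',
  customer_tier: 'gold',
};

console.log(stripInternalAttributes(attributes));
```

The same classification could later drive tooling, for example listing which settings and outputs a module exposes.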

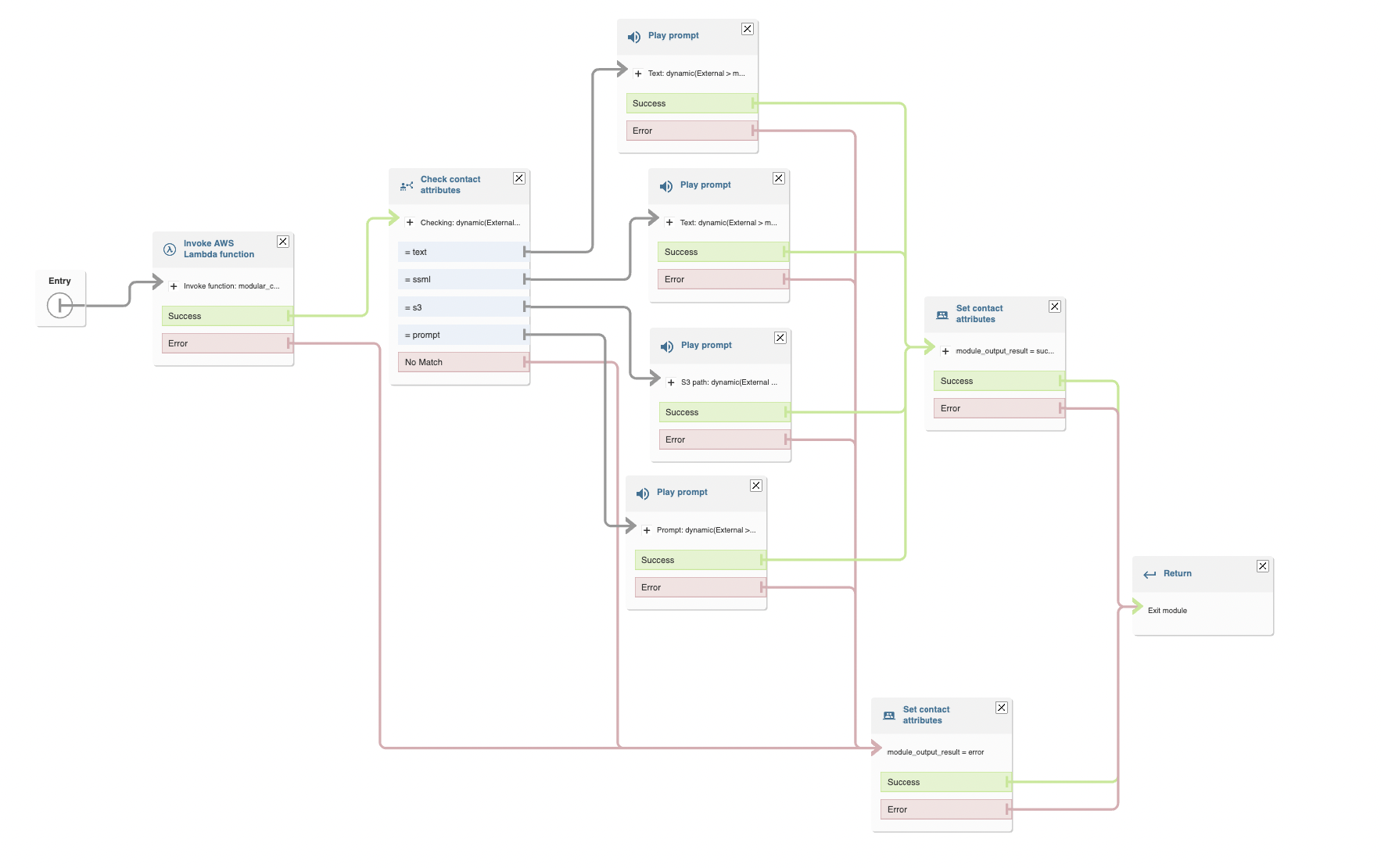

Module design

Module for playing a prompt

If we look at the design of a simple module, it has some distinct parts. At the start of the module we call a Lambda function to flatten the settings that are passed into the module, so that we can use all of the settings in the module itself. For consistency we add a prefix of “module_setting_” to each setting so that we can cleanly identify which settings were passed into the module. This will be important later when we are generating descriptors for modules.

The simple lambda code to flatten the settings would look something like this:

modular_connect_json_to_attributes

```javascript
export const handler = async (event) => {
  // The settings arrive as a JSON string; return them as prefixed attributes.
  return addPrefixToKeys(JSON.parse(event.Details.Parameters.jsonString), "module_setting_");
};

// Copies each own property of jsonObject onto a new object under prefix + key.
function addPrefixToKeys(jsonObject, prefix) {
  const result = {};
  for (const key in jsonObject) {
    if (jsonObject.hasOwnProperty(key)) {
      const newKey = `${prefix}${key}`;
      result[newKey] = jsonObject[key];
    }
  }
  return result;
}
```
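To make the flattening step concrete, here is a small local example using the PlayPrompt settings from the configuration above. It is a sketch rather than the original function: `settingsToAttributes` is a hypothetical variant that also converts non-string values to strings, on the assumption that contact attributes are handled as strings inside the flow, and the `Retries` setting is invented for illustration.

```javascript
// Variant of the flattening step that keeps every attribute value a string.
function settingsToAttributes(settingsJson, prefix = 'module_setting_') {
  const settings = JSON.parse(settingsJson);
  const attributes = {};
  for (const [key, value] of Object.entries(settings)) {
    // Strings pass through unchanged; anything else is serialized as JSON.
    attributes[`${prefix}${key}`] = typeof value === 'string' ? value : JSON.stringify(value);
  }
  return attributes;
}

// PlayPrompt settings from the sample configuration, plus a made-up numeric one.
const settingsJson = JSON.stringify({
  PromptType: 'text',
  PromptValue: 'Welcome to Example Limited',
  Retries: 3,
});

const flattened = settingsToAttributes(settingsJson);
console.log(flattened);
```

Inside the module, each setting would then be referenced by its prefixed name, for example `module_setting_PromptType`.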

Now that we have the settings in the module, we can perform whatever is needed. In this example we are going to play a prompt, and depending on the “type” setting in the module settings we will play the prompt as text, as SSML, from S3, or from the prompt library. This is a simple module, but we could easily augment it with more features and settings: perhaps we want to write to a special audit log whenever a prompt is played, or to require that the prompt is only played when a certain condition is met. This is an overly simple example of a module, but the idea is hopefully clear: the module can perform a lot of functionality, and the settings just decide what it does. This means we have a highly consistent and reusable building block for our contact center.

Conclusion

In this blog post we introduced the idea of using modules in an Amazon Connect contact center solution and its benefits from a business perspective. We have started to build some core foundational components that execute the modules configured in DynamoDB. However, as you will have realized, there is more to be done: we need a way to build the configuration for these modules, and a way to describe the configuration and behavior of different call centers or dialled numbers. We will look at this in the next blog post, where we will build a front end to define and manage the modules. It is at that point that the value to an operations team will become even more apparent.