Using Stored Prompts with Lambda to interact with ChatGPT in a functional way

In this blog post, we will explore how to use AWS Lambda functions to interact with ChatGPT, a highly sophisticated conversational AI model developed by OpenAI. Our main task will be to structure predefined prompts stored in an Amazon DynamoDB table, which the Lambda function will use to call ChatGPT. This design is akin to using stored procedures in a traditional database environment, except that in our case the stored prompts are natural-language text used to drive a functional dialogue with the AI.

The stored prompts will be designed to have a particular input and specified output. A user, when performing an action, will need to specify the prompt they want and the input data. The Lambda function then retrieves the appropriate prompt, integrates the input data, interacts with ChatGPT, and finally provides the user with a response.

Crafting effective prompts for a generative AI is an emerging skill in its own right. With this approach we can define complex stored prompts and develop them in isolation from the code that calls them.

Let’s delve deeper into each stage of the process.

AWS Lambda and DynamoDB

AWS Lambda is a serverless computing service that lets you run your code without provisioning or managing servers. We are using Lambda to retrieve data from DynamoDB and to interact with the OpenAI API.

Amazon DynamoDB is a fully managed NoSQL database service that provides fast and predictable performance. We are using this to store all of our stored prompts.

ChatGPT: The AI Conversation Partner

ChatGPT is a conversational AI service developed by OpenAI, designed to simulate human-like text conversations. It is built on GPT language models, which use a transformer architecture that is particularly effective at understanding the context of a conversation and generating coherent, contextually appropriate responses. The most capable model at the time of writing (GPT-4) can generate highly sophisticated and nuanced responses, making it an ideal tool for developing conversational interfaces and AI assistants. The example code later in this post uses gpt-3.5-turbo.

Structuring Predefined Prompts

In our setup, we want to have a set of predefined prompts that act as a sort of API for interacting with ChatGPT. These prompts will be designed to expect a specific type of input and will have a specified output format.

Consider a prompt like “Translate the following English text into Spanish: {input}.” The input would be any English text string, and the output would be the translated Spanish text.

This is a deliberately simple example to convey the idea behind what we are doing. Perhaps a better example would be a prompt such as:

“Given the following input {input} provide a sentiment score from 0 to 1 where 0 is unhappy and 1 is very happy. Respond only in JSON format in the style of {"confidence":""}”

In this way we let the generative AI perform sentiment analysis for us. The point, of course, is that any prompt can be used: extremely complex prompts can be constructed with any number of instructions for the AI.
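One detail worth noting with a prompt like the sentiment one is that it contains literal braces of its own (the JSON example) alongside the `{input}` placeholder. The sketch below is one possible way to fill such a template with a plain string replacement; the helper names `fill_prompt` and `format_would_fail` are made up for illustration and are not part of any library.

```python
# The sentiment prompt from above, with its literal JSON braces.
SENTIMENT_PROMPT = (
    "Given the following input {input} provide a sentiment score from 0 to 1 "
    "where 0 is unhappy and 1 is very happy. Respond only in JSON format in "
    'the style of {"confidence":""}'
)


def fill_prompt(template: str, user_input: str) -> str:
    """Replace only the literal {input} token, leaving other braces untouched."""
    return template.replace("{input}", str(user_input))


def format_would_fail(template: str) -> bool:
    """Return True if str.format cannot cope with the template's literal braces."""
    try:
        template.format(input="x")
    except (KeyError, IndexError, ValueError):
        return True
    return False


filled = fill_prompt(SENTIMENT_PROMPT, "I love this product")
print(filled)
print(format_would_fail(SENTIMENT_PROMPT))
```

With `str.format`, the JSON example would be treated as another replacement field, which is why the sketch avoids it. An alternative would be to escape the literal braces as `{{` and `}}` when authoring the prompt.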

These prompts will be stored in a DynamoDB table. Each prompt will have a unique key, its function name, allowing the Lambda function to quickly and efficiently retrieve the appropriate prompt based on the function name the user supplies.

Here’s a simple example of what this DynamoDB table might look like:

| FunctionName | Prompt | InputType | OutputType |
|---|---|---|---|
| TranslateEngToEsp | “Translate the following English text into Spanish: {input}” | Text | Text |
| Sentiment | “Given the following input {input} provide a sentiment score from 0 to 1 where 0 is unhappy and 1 is very happy. Respond only in JSON format in the style of {"confidence":""}” | Text | JSON |
| GetTriviaQuestionsCSV | “Write me {input} multiple choice trivia question in CSV format with the column headings "'question','optionA','optionB','optionC','optionD','answer'". Include the column headings as the first line.” | Number | CSV |
| … | … | … | … |
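As a rough sketch of how a row like these could be written to DynamoDB, the snippet below builds an item in the low-level attribute-value format and passes it to `put_item`. The table name `openai_prompt_library` and the `functionName` and `prompt` attribute names follow the Lambda code in this post; the `inputType` and `outputType` attribute names and both helper functions are assumptions made for illustration.

```python
TABLE_NAME = "openai_prompt_library"  # table name used by the Lambda function in this post


def build_prompt_item(function_name, prompt, input_type, output_type):
    """Build a DynamoDB item in the low-level attribute-value format."""
    return {
        "functionName": {"S": function_name},  # partition key
        "prompt": {"S": prompt},
        "inputType": {"S": input_type},    # assumed attribute name
        "outputType": {"S": output_type},  # assumed attribute name
    }


def put_prompt(dynamodb_client, item):
    """Write one stored prompt; dynamodb_client is e.g. boto3.client('dynamodb')."""
    dynamodb_client.put_item(TableName=TABLE_NAME, Item=item)


item = build_prompt_item(
    "TranslateEngToEsp",
    "Translate the following English text into Spanish: {input}",
    "Text",
    "Text",
)
print(item["functionName"])

# Writing to a real table needs AWS credentials and an existing table:
# import boto3
# put_prompt(boto3.client("dynamodb"), item)
```

Passing the client in as an argument keeps the item-building logic testable without any AWS access.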

Setting Up the Lambda Function

Our Lambda function will act as the intermediary between the user and ChatGPT. It will take the user’s chosen prompt and input, retrieve the appropriate stored prompt from the DynamoDB table, and insert the input data into the prompt.

The Lambda function will then send this constructed prompt to the ChatGPT API. It will then take the API’s response, and format it for the user.

The basic structure of the function will look something like this (written in Python):

    import os

    import boto3
    import openai

    dynamodb = boto3.client('dynamodb')


    def lambda_handler(event, context):
        # Retrieve the prompt key and input from the user's request
        function_name = event['functionName']
        user_input = event.get('input')

        # Retrieve the stored prompt from DynamoDB
        item = dynamodb.get_item(
            TableName='openai_prompt_library',
            Key={'functionName': {'S': function_name}}
        ).get('Item')
        if item is None:
            raise KeyError(f"No stored prompt named '{function_name}'")
        stored_prompt = item['prompt']['S']

        # Insert the user input into the prompt. A plain replace is used rather
        # than str.format so that literal braces in a prompt (such as a JSON
        # example) are left untouched.
        if not user_input:
            chat_input = stored_prompt
        else:
            chat_input = stored_prompt.replace('{input}', str(user_input))

        # The API key is read from the Lambda's environment variables
        openai.api_key = os.environ.get('openai_api_key')

        # Call the ChatGPT API (ChatCompletion is the interface in openai versions before 1.0)
        response = openai.ChatCompletion.create(
            model="gpt-3.5-turbo",
            messages=[
                {"role": "system", "content": "You only respond with the answer, no additional dialog"},
                {"role": "user", "content": chat_input}
            ]
        )

        # Format and return the response
        chat_output = response['choices'][0]['message']['content']
        return chat_output

This function takes a user’s request, identifies the appropriate stored prompt, inserts the user’s input, and interacts with the ChatGPT API. The response from the API is then returned to the user.
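To try the same flow without AWS or an OpenAI key, one option is to pass the two external dependencies in as functions. The sketch below is not the Lambda itself: `run_stored_prompt`, `FAKE_PROMPTS` and `fake_model` are illustrative stand-ins, and the `{input}` token is filled with a plain string replacement.

```python
from typing import Callable, Optional


def run_stored_prompt(
    function_name: str,
    user_input: Optional[str],
    get_prompt: Callable[[str], str],
    call_model: Callable[[str], str],
) -> str:
    """Look up a stored prompt, fill in the input, and return the model's reply."""
    stored_prompt = get_prompt(function_name)
    if user_input:
        chat_input = stored_prompt.replace("{input}", str(user_input))
    else:
        chat_input = stored_prompt
    return call_model(chat_input)


# Stand-in for the DynamoDB table
FAKE_PROMPTS = {
    "TranslateEngToEsp": "Translate the following English text into Spanish: {input}",
}


def fake_model(chat_input: str) -> str:
    """Stand-in for the ChatGPT call; echoes what it was asked."""
    return f"[model received] {chat_input}"


result = run_stored_prompt(
    "TranslateEngToEsp", "Good morning", FAKE_PROMPTS.__getitem__, fake_model
)
print(result)
# prints: [model received] Translate the following English text into Spanish: Good morning
```

In a real deployment, `get_prompt` would wrap the DynamoDB lookup and `call_model` would wrap the OpenAI call, so the orchestration logic can be unit tested separately from both services.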

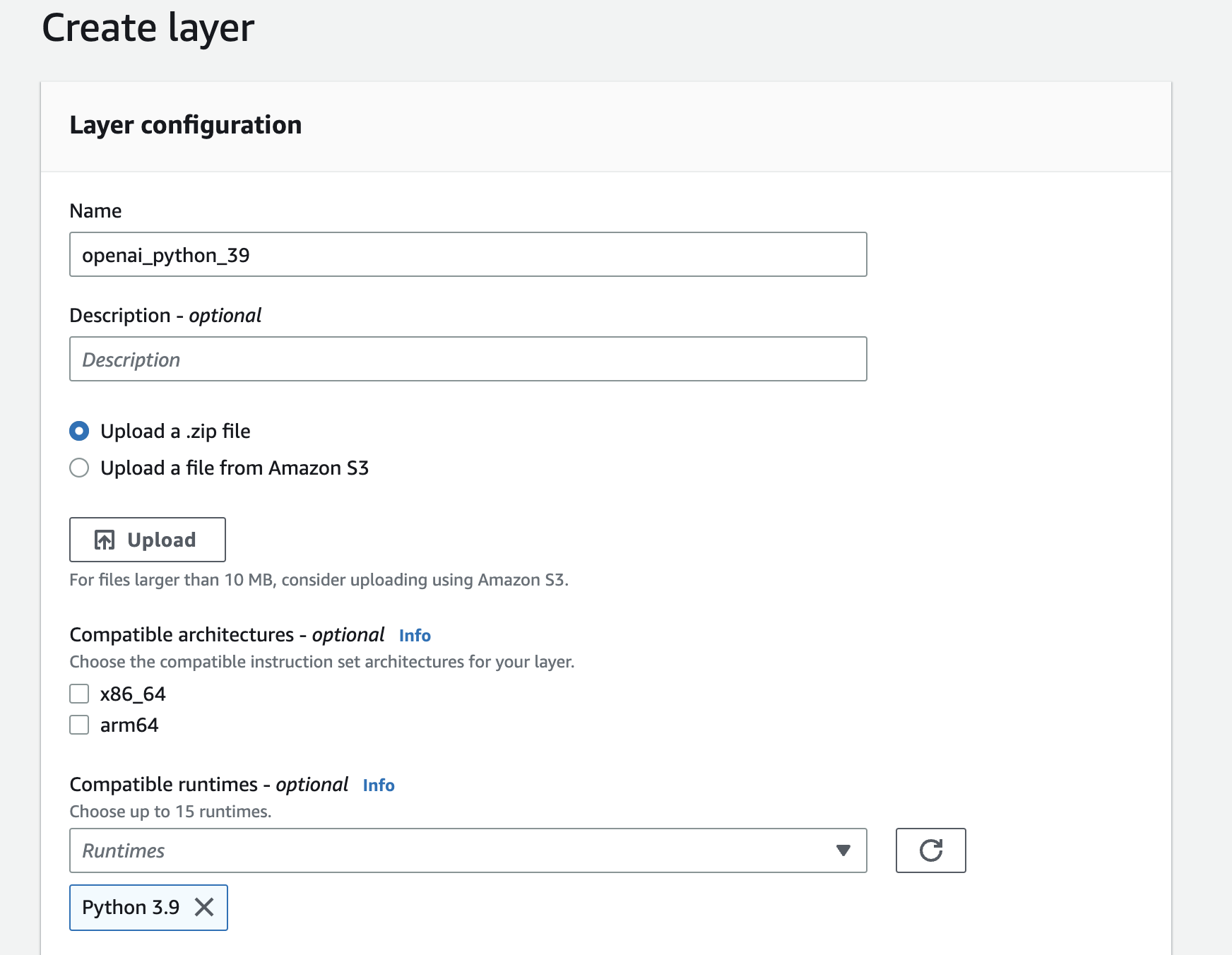

To call the OpenAI ChatGPT API, though, we need to add a layer containing the OpenAI Python library to our Lambda. It is possible to create your own layer, but for this blog post we will use a prebuilt one. Helpfully, some people have already built a layer that we can use, for example here, or it's also included in my GitHub for this blog post. We will be using this Python layer for our Lambda.

We create a layer in the Lambda console by specifying a name and uploading our layer zip:

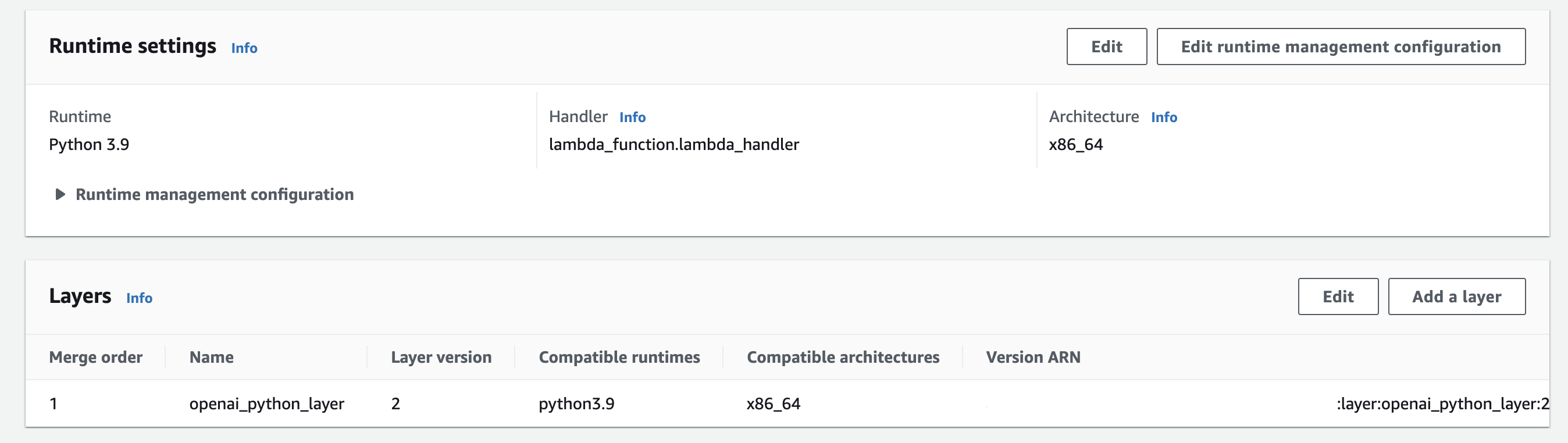

After creating the layer we can add it to our Lambda function. This will allow the Lambda function above to make the API call to ChatGPT.

Example method call

To call this Lambda function, all we need to do is supply the name of our stored prompt along with its input, and we get the response back in a consistent and reliable format:

    {
        "functionName": "GetTriviaQuestionsCSV",
        "input": "4"
    }

and the response we receive is:

    "question","optionA","optionB","optionC","optionD","answer"
    What is the capital of Australia?,"Sydney","Melbourne","Canberra","Brisbane","Canberra"
    What is the world's largest ocean?,"Pacific","Atlantic","Indian","Arctic","Pacific"
    Which planet is known as the Red Planet?,"Jupiter","Mars","Saturn","Venus","Mars"
    What is the smallest country in the world?,"Monaco","Maldives","Vatican City","Nauru","Vatican City"
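From a caller's point of view, the request and response above might be handled roughly as follows. This is a sketch under assumptions: the Lambda name `stored-prompt-runner` is hypothetical, and the client passed in is expected to be `boto3.client("lambda")`. Because Lambda serialises the handler's return value as JSON, the returned string arrives as a JSON string and is decoded before the CSV is parsed.

```python
import csv
import io
import json

LAMBDA_NAME = "stored-prompt-runner"  # hypothetical Lambda function name


def invoke_stored_prompt(lambda_client, function_name, user_input):
    """Synchronously invoke the Lambda and return its decoded return value."""
    payload = json.dumps({"functionName": function_name, "input": user_input})
    response = lambda_client.invoke(
        FunctionName=LAMBDA_NAME,
        InvocationType="RequestResponse",
        Payload=payload.encode("utf-8"),
    )
    # The handler returns a string, which Lambda delivers as a JSON string.
    return json.loads(response["Payload"].read())


def parse_trivia_csv(csv_text):
    """Turn the CSV reply into a list of dicts keyed by the header row."""
    return list(csv.DictReader(io.StringIO(csv_text)))


# Two rows of the response shown above, used here instead of a live call.
SAMPLE = (
    '"question","optionA","optionB","optionC","optionD","answer"\n'
    'What is the capital of Australia?,"Sydney","Melbourne","Canberra","Brisbane","Canberra"\n'
    'Which planet is known as the Red Planet?,"Jupiter","Mars","Saturn","Venus","Mars"\n'
)
rows = parse_trivia_csv(SAMPLE)
print(rows[0]["question"], "->", rows[0]["answer"])

# With AWS credentials and a deployed function:
# import boto3
# reply = invoke_stored_prompt(boto3.client("lambda"), "GetTriviaQuestionsCSV", "4")
# rows = parse_trivia_csv(reply)
```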

What’s the point of all this?

By structuring our interaction with ChatGPT in this way, we can create a more structured and predictable conversational interface. We can design prompts to guide the AI in the type of responses we want to generate, and users can choose from a set of available prompts to drive their interactions with the AI.

We can now write highly complex prompts, knowing that each one has a defined output. If we later wanted to improve a prompt or make it more efficient, callers would be unaffected as long as its input and output stay the same. We have abstracted away the AI layer and can now use it in a functional way.
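A defined output is only useful if callers can rely on it, so it may be worth checking each reply against the expected shape. As a minimal sketch, assuming the Sentiment prompt's `{"confidence": ...}` reply format, a caller could validate the response like this (`parse_sentiment` is a hypothetical helper, not part of the Lambda above):

```python
import json


def parse_sentiment(raw_reply: str) -> float:
    """Validate a Sentiment reply and return the score as a float in [0, 1]."""
    # json.loads raises json.JSONDecodeError (a ValueError) for non-JSON replies
    data = json.loads(raw_reply)
    if not isinstance(data, dict) or "confidence" not in data:
        raise ValueError("reply is missing the 'confidence' field")
    try:
        score = float(data["confidence"])  # accepts 0.85 as well as "0.85"
    except (TypeError, ValueError) as exc:
        raise ValueError("confidence is not a number") from exc
    if not 0.0 <= score <= 1.0:
        raise ValueError(f"score {score} is outside the range 0 to 1")
    return score


print(parse_sentiment('{"confidence": "0.85"}'))  # prints 0.85
```

If the model ever replies with extra dialogue or an out-of-range value, the caller gets a clear `ValueError` instead of silently passing bad data along.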

Conclusion

In this blog post, we’ve taken a deep dive into an exciting way of structuring interactions with the ChatGPT language model. By leveraging AWS Lambda and DynamoDB, we’ve created a system that provides consistent interaction with the AI, letting us embed it in applications where it might not have been considered suitable before.

While this approach comes with its own set of challenges, like the design of effective prompts and the current latency involved with a third party like OpenAI, the potential benefits are immense. We are able to separate the concern of effective prompt design from the functional way in which the prompts are used.

When AWS releases its own generative AI infrastructure, such as Amazon Bedrock and the Amazon Titan models, we can make this solution even better, and I will update this post or write a new one when those services are available.

So, what should we build using this? Spoiler alert: the next couple of blog posts are going to build on this.